
ChaosAdventurer

Shared posts

DateTime

ChaosAdventurer: Depends on your needed/desired level of precision and accuracy. Much of the time, I can get a number good enough for management, but the variabilities still readily lead to imaginary numbers and degradation of sanity.

![It's not just time zones and leap seconds. SI seconds on Earth are slower because of relativity, so there are time standards for space stuff (TCB, TGC) that use faster SI seconds than UTC/Unix time. T2 - T1 = [God doesn't know and the Devil isn't telling.]](https://imgs.xkcd.com/comics/datetime.png)

Here’s why Putin won’t use nukes in Ukraine — Pass it on.

President Putin of Russia has been talking a lot lately about his forces using nuclear weapons (presumably tactical nuclear weapons) in the war with Ukraine. It’s an easy threat to make but a difficult one to follow through on, for reasons I’ll explain here in some detail. I’m not saying Mr. Putin won’t order nuclear strikes. He might. Dictators do such things from time to time. But if Mr. Putin does push that button, I’d estimate there is perhaps a 20 percent chance that nukes will actually be launched and a 100 percent chance that Mr. Putin will end that day with a bullet in his brain.


Given that I don’t think Mr. Putin really wants a bullet in his brain, my goal here is to lay out facts and probabilities to show how nuking Ukraine would be a huge mistake for Putin and Russia. With the facts thus presented and presumably repeated by many people in many venues, that information will quickly reach everyone in positions to make such a nuclear war NOT happen. But without essays like this one, that education and intervention is much less likely. So I am writing this as a public service. Pass it on.

What do I know? I worked as an investigator for the Presidential Commission on the Accident at Three Mile Island in 1979. Part of my portfolio then was to study the Federal Emergency Management Agency’s response to that nuclear accident, which was pathetic.

TMI was FEMA’s first big crisis as FEMA. Most of the agency had been called Civil Defense until a short time before TMI. Their idea of nuclear safety (remember the Nuclear Regulatory Commission, not FEMA, actually regulates the reactors) had been tracking clouds of predicted fallout from Russian nuclear attacks driven by prevailing winds and coming up with plans to move civilians out of the way of those clouds. In the northeast USA around Three Mile Island, the old Civil Defense plans called for moving 75 million people in 72 hours — an impossible task, then or now.

Think about that task for a moment. In Ukraine so far it has taken three weeks — not three days — to move THREE million people. And this is before any nukes have dropped.
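To put rough numbers on that comparison, here is a back-of-the-envelope sketch in Python. The figures are the ones quoted above; treating "three weeks" as exactly 21 days is an assumption made only for this illustration.

```python
# Back-of-the-envelope comparison of the two evacuation rates quoted above.
# Assumption: "three weeks" is taken as exactly 21 days.

HOURS_PER_DAY = 24

plan_people = 75_000_000        # old Civil Defense plan for the northeast USA
plan_hours = 72

actual_people = 3_000_000       # people moved in Ukraine so far
actual_hours = 21 * HOURS_PER_DAY

plan_rate = plan_people / plan_hours          # people per hour the plan required
actual_rate = actual_people / actual_hours    # people per hour actually achieved

print(f"Plan required: {plan_rate:,.0f} people/hour")
print(f"Ukraine so far: {actual_rate:,.0f} people/hour")
print(f"The plan assumed a rate about {plan_rate / actual_rate:.0f}x faster")
```

Under those assumptions the old plan needed people to move roughly 175 times faster than the real-world evacuation has managed.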

The simple lesson here is that nobody is going to have time to move out of the way of tactical nukes.

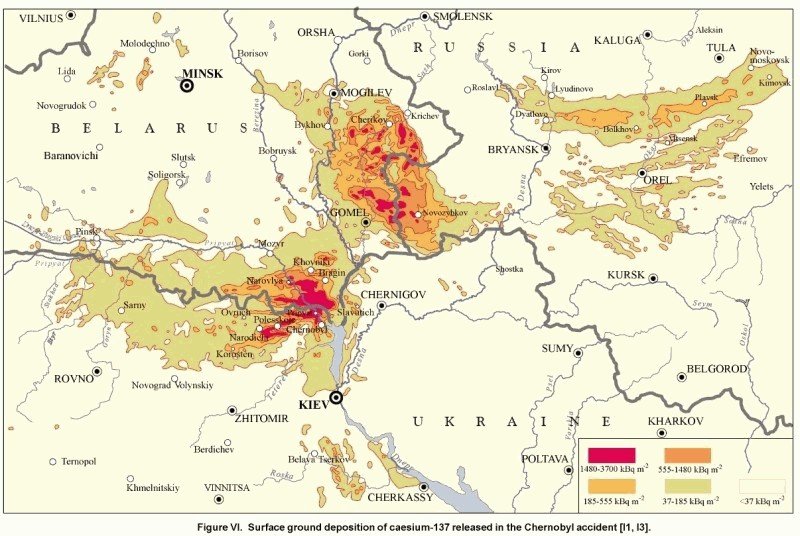

If you are wondering what the damage of such a limited nuclear war would look like, the Chernobyl nuclear accident from 1986 provides a pretty good example, since that disaster site lies between Kiev and Russia and Belarus. Above you’ll find a map showing the Cesium 137 fallout from Chernobyl. If you want to know what bombing Kiev would do, just move the Chernobyl spot down and a little to the right and see where the blotches fall.

But tactical nukes aren’t a nuclear accident, you say, the map would be different. Yes, it would be BIGGER. Chernobyl melted DOWN while bomb and missile and artillery fallout bounce UP into the atmosphere and spread much farther.

Most of the fallout of a Kiev attack, in fact, would land in Russia. The cities of Bryansk (427,000 population), Kaluga (338,000), Kursk (409,000), Orel (324,000), and Tula (468,000) would all be hit, not by weapon strikes, but by fallout. That’s just under two million people exposed in those five cities, not counting folks in the countryside between.
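For anyone who wants to check the "just under two million" figure, a quick sketch that simply adds up the five populations quoted above (no other data is assumed):

```python
# Populations quoted above for the five Russian cities in the fallout path.
city_populations = {
    "Bryansk": 427_000,
    "Kaluga": 338_000,
    "Kursk": 409_000,
    "Orel": 324_000,
    "Tula": 468_000,
}

total_exposed = sum(city_populations.values())
print(f"{total_exposed:,} people")  # 1,966,000 people
```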

Two million is approximately the population of Kiev, or was before a lot of those people fled west.

We can estimate civilian deaths from radiation, from heat, from atmospheric over-pressure, but I’ll just jump here to the bottom line that about one million Russians would die from such a nuclear attack, both directly and through greatly increased cancer deaths in later years.

So a nuclear attack on Kiev would kill more Russians than Ukrainians.

Moving the attack to, say, Odessa would kill more Ukrainians, but it would completely destroy the Ukrainian agricultural economy for a century and still kill tens of thousands of Russians.

Now consider the supposed military justification for such an attack. Ukraine claims to have killed 15,000 Russian soldiers while Russia says its losses are more like 1500. I don’t care which number is correct because Putin killing one million of his own people to avenge 1500 or 15,000 deaths makes no sense.

He’s just whining.

The Russians are not evacuating those five cities, so Putin is either willing to lose a million Russian citizens or he has no real intention of launching nukes.

I’m guessing it’s a bluff.

And what if it isn’t a bluff? What if Putin actually goes ahead and pushes that button saying — as bullies are wont to do — “Look what you made me do.”

IF Putin pushes that button, it will set in motion a series of very quick events as half a dozen nations take action not against Russia, but against Putin personally. Navy SEALs and Chinese commandos will fall from the sky, but Putin will already be dead, killed by his own people, whether any nukes are actually launched or not.

Look what he made them do.

The post Here’s why Putin won’t use nukes in Ukraine — Pass it on. first appeared on I, Cringely.


I'm With Her

ChaosAdventurer: I didn't realize Americans voted at the Hospital (as the sign indicates, the hospital is over there to the right)

Controlling JavaScript Malware Before it Runs, (Sat, Jun 18th)

ChaosAdventurer: Good trick to block some malware, and I am trying to set it to unread but oldreader doesn't want to do that on this droid

We've posted a number of stories lately about various exploit kits and the malware they post. What ...

Renewables: The Next Fracking?

“Kyle Dine & Friends” Video Premiere – Sept. 20 & 21

Mark your calendars! This upcoming Sunday and Monday you can watch the video debut for free online and chat with Kyle Dine too!

It will be streaming from www.foodallergyvideo.com/premiere.html.

Your Phone Interface is a Legacy Train Wreck

If you were to design a smartphone interface from scratch, without any legacy issues, would it look like a bunch of app icons sitting on a home screen?

No. Because that would be stupid. Would you want your users to be hunting around for the right app every time they want to do simple things? That ruins flow. And it unnecessarily taxes your brain by making you shift your mental model each time you switch apps. You’re always thinking Is this the one with the swiping left or the one that scrolls down?

There is a lot of background processing in your brain just to move from app to app. I sometimes skip simple tasks on my phone because I can’t go app-diving one more time or my head will explode. My brain seems to have a finite capacity within a given day for “hunting for the right app.”

When you have my kind of job, losing flow is devastating. I’m a fan of new technology, but objectively speaking, the smartphone is the biggest threat to creativity since communism. My phone interrupts me all day long. And if I have a new idea that I want to jot down before the next interruption, it is nearly impossible because of the app-hunting legacy model of phones. I usually forget what I was thinking because I get interrupted or my mind moves on before I even decide what app to use for my note. This is all worsened by the fact that modern life is making my attention span shrink to nothing.

So what would a proper smartphone interface look like?

It would be a blank screen. Like this, except with a keyboard at the bottom.

Let’s say, for example, you start typing (or speaking) on the blank screen…

"Kenn…"

Your smartphone starts guessing that you are either writing an email or a text message because “Kenn” is almost certainly short for Kenny and you have been communicating with someone by that name. A hovering menu appears at the top right while you continue, offering you the chance to choose your app (email or text) whenever you please. You can do it now or wait until you stop typing, to preserve flow.

Halfway through your typing, the OS understands that this is probably an email message because your recent messages to Kenny were all email. The OS starts wrapping an email interface around your message as you type. It also automatically attaches your history of email back and forth to your new message, at the bottom.

The idea here is that you start working first, to maintain flow, and only later do you select the app. And by the time you need to select the app, the OS has done a 95% accurate job of doing it for you, so you simply proceed without ever actively selecting the app.
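A minimal sketch of that guessing step, in Python. Nothing here comes from a real phone OS: the `guess_channel` function, the shape of the history list, and the simple "most-used channel wins" rule are all assumptions chosen for illustration.

```python
from collections import Counter

def guess_channel(contact, history, default="text"):
    """Guess which app to wrap around a draft addressed to `contact`.

    `history` is a list of (contact, channel) pairs, most recent last.
    Hypothetical rule: pick the channel used most often with this contact,
    falling back to `default` when there is no history at all.
    """
    channels = [channel for name, channel in history if name == contact]
    if not channels:
        return default
    return Counter(channels).most_common(1)[0][0]

# Made-up history: every recent message to Kenny was an email.
recent = [("Kenny", "email"), ("Steve", "text"), ("Kenny", "email")]
print(guess_channel("Kenny", recent))   # email
print(guess_channel("Alice", recent))   # text
```

A real system would presumably weigh recency, time of day, and message length as well; the point is only that the choice can be deferred and then made from context.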

Does this work for all sorts of apps? I haven’t thought through every possibility, but I think so. Let’s see some more examples and I’ll tell you how the OS would guess the right app and auto-surround your work with the most relevant options.

| If you type… | Then… |
| --- | --- |
| wea… | Local weather info pops up |
| wel… | If Wells Fargo is your bank, the sign-in page appears |
| eat | A restaurant search app or search engine pops up |
| saf… | Safari browser pops up |
| Goo… | Google search box pops up |
| Stev… | Either text or email (hover menu choice) |
| Ala… | Open alarm clock |
| tw… | Open twitter |
| My bagel is… | Hover menu for Facebook, Twitter, |
| Pick up… | Reminder app opens for your to-do list |
| Thurs… | Your calendar pops up to show next Thursday |
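The examples above amount to a prefix lookup. Here is a rough Python sketch of one way it could work; the `PREFIX_ACTIONS` table and the `guess_action` function are hypothetical, and the "longest matching prefix wins" rule is an assumption, not something the examples specify.

```python
# Hypothetical lookup table built from a few of the examples above.
# Keys are lowercase prefixes; values are the action the OS would take.
PREFIX_ACTIONS = {
    "wea": "show local weather",
    "eat": "open restaurant search",
    "saf": "open Safari",
    "goo": "open Google search box",
    "ala": "open alarm clock",
    "tw": "open Twitter",
    "thurs": "show calendar for next Thursday",
}

def guess_action(typed):
    """Return the action for the longest known prefix of `typed`, or None."""
    text = typed.lower()
    matches = [p for p in PREFIX_ACTIONS if text.startswith(p)]
    if not matches:
        return None  # nothing recognized yet; keep the blank screen
    return PREFIX_ACTIONS[max(matches, key=len)]

print(guess_action("Weather"))   # show local weather
print(guess_action("twi"))       # open Twitter
print(guess_action("xyz"))       # None
```

A static table like this is the "dumb" version; the context-aware guesses (your bank, your contacts) would need per-user data layered on top.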

Stop saying I am reinventing the DOS operating system. DOS was dumb. The smartphone can see your work as part of a larger context. It will know what you need based on the situation.

Smartphone users are experienced at typing because we do so much texting. We do it quickly and effortlessly. So my suggested blank-screen interface goes with our strengths instead of making you play a game of Where’s Waldo to find the right app before every task.

How did we get the app-centric terrible interfaces of today? I think it goes back to the dawn of personal computers. In those days it was no big deal to first pick the software (Word or Excel) and then spend a few hours within an app doing one task. There was no mental tax involved in switching apps because you only ever used one or two. So the app-first model became normal.

Fast-forward to the original Apple smartphone. The business model required an open market for software providers, and they each got their own little branding, navigation strategies, and real estate on your screen. It works great until you have fifty apps. The app-first interface is a total failure at this point. It works, but the cost is so high I am having legitimate thoughts about abandoning my smartphone for good. (I won’t pull the trigger, but why am I even considering it?)

If you don’t like the blank screen with a keyboard interface, here’s another idea that is better than current phones: Use faces for the interface.

By that I mean my home screen icons should be the faces of people I deal with most often. If the icon with Bob’s face shows a little “2” on it, I know I can click to see two messages from Bob, or perhaps I have one message and one meeting today with Bob, or one task to do for Bob.

The main insight here is that humans reflexively arrange their tasks by the human that benefits from it. Sometimes the human is yourself, so your face is on the front page too. I doubt you can think of a task that does not relate to a specific face in your life.
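As a rough illustration of the face-icon idea, the badge on each face could simply be a count of pending items grouped by person. The item list and field names below are invented for this sketch.

```python
from collections import Counter

# Made-up pending items; each one is tied to the person it is for.
pending = [
    {"kind": "message", "person": "Bob"},
    {"kind": "meeting", "person": "Bob"},
    {"kind": "task", "person": "me"},
]

# The badge on each face is just the number of pending items for that person.
badges = Counter(item["person"] for item in pending)
print(badges["Bob"])  # 2
```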

And finally, a word to current makers of smartphone operating systems. If my OS interrupts me to ask about updating software, you failed. Please keep working on that until you get it right. Make your machine conform to my flow, not the other way around.

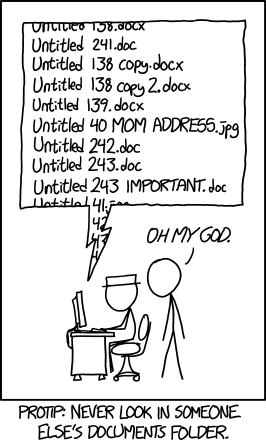

Documents

ChaosAdventurer: The alt tag is an impossible file name as well, too long for Windows or Linux to manage. I do hit this sort of thing at clients' sites all the time.

Han and Cheese – DORK TOWER 12.09.14

HEY, since I have your attention, check out MY INSANE 60-MILE BIKE RIDE, and take a gander at all the cool swag you can get simply for helping a wonderful charity! Including new limericks by Neil Gaiman, Pat Rothfuss that the Doubleclicks will also set to music, and lots more from more bestselling authors than I can shake a deadline at!

A bird on the wall …

ChaosAdventurer: An app to do this would be nice, but just wouldn't have the same impact.

It was mid-afternoon and someone was poking me. “Hey, are you awake?” they whispered. I blinked and raised my chin.

“Huh?”

“Ralph wants to know what database we’ve chosen. What database did we choose?”

“Grughnk. Guhbbah.” I’d nearly fallen asleep in the marathon marketing meeting again. “Um, we decided on, um, Squorical.”

“What?”

“Orasqueakle Server. Yeah, that was it.”

“Oh God.”

Many eyes were on me. Many of the eyes had suits and ties attached to them, and they wore expectant looks. I put down the notebook I’d been doodling satanic cartoons in and lurched from my chair. “Sorry, I’ve got to –” I said, and waved generally toward the door. (“Oraskwackle? Really?”)

Ralph the Marketing Guy said, “Great idea. Ten minute bio-break everyone!” He was chipper and ready for another hour at least. I wanted to throw him off our balcony.

We’d been in that meeting room for nearly two hours, discussing nonsense and useless stuff (along the lines of Douglas Adams: “Okay, if you’re so smart, YOU tell us what color the database tables should be!”) and I needed to be doing designing and coding rather than listening to things that made my shit itch. It was the third multi-hour marathon meeting with Marketing that week, and I was nearly at the end of my rope. One of the topics under discussion was why engineering was behind schedule.

—-

Our little group of programmers often walked to lunch. I’m not sure where we went the next day; it might have been to the borderline not-very-good Chinese place down the hill that had wonderful mis-spellings on its menu (my favorite dish was Prok Noddles), or it might have been the Insanely Hot Chinese place that was in an old IHOP A-frame building (they had a great spicy soup that would spray a perfect Gaussian distribution of hot pepper oil on the paper tablecloth as you ate from the bowl). All I remember for sure is that on the return walk we passed the shops on the street as we always did, but for some reason I paused just outside the clock shop, which I’d never really paid attention to before.

A spark of evil flared in my head. “You guys go on, I’m going in here.” I got a couple of strange looks, but nobody followed me in. I spent about a hundred dollars.

—-

The next morning the marathon meeting started on time, but there was a new attendee. I had nailed it to the wall. It said “Cuckoo!” ten times.

“What is that?” one of the marketeers asked. [This question pretty much defines most marketing for me, by the way].

“Just a reminder,” I said with a slight smile and a tone that implied it was no big deal. (I’d talked with our CEO about it earlier and he had said okay, but I think I was going to do it anyway).

The rest of the usual attendees filtered in and the meeting started. The clock said “Bong!” at fifteen minutes past the hour, which got a couple of raised eyebrows, and it bonged again on the half hour and a quarter-to. At 10:59 it made a “brrrr-zup!” sound, as if it was getting ready for some physical effort, then at 11:00 the bird popped out, interrupting a PowerPoint presentation that I’ll never be able to recall, and went “CUCKOO! CUCKOO!” about a million times. Well, only eleven, but it certainly felt like a long, long time. The guy who had been talking had trouble remembering what he’d been saying. Everyone else had been expecting the display, but the speaker, one of our serial meeting-stretchers, had been lost in his own blather.

The meeting broke early that day.

The next day, too.

The hours we spent in that room no longer passed anonymously. The clock’s smaller noises, as it prepared to say “Bong”, and its more dramatic preparations for each hour’s Big Show were now part of the agenda. We knew time was passing. The meetings did become shorter. We had an interesting time explaining the clock to customers (for this was a small company with only a couple of meeting rooms), but in general people from outside understood, and often laughed in approval.

The clock was a relatively cheap model that had to be wound every day. I usually got in to work pretty early and wound it, but occasionally I forgot to, or worked remotely and didn’t get to the office at all. Somehow the clock never ran down. It turned out that our office manager was winding it, and occasionally our CEO would wind it, too.

I knew I’d gotten the message across when one of the marketing guys looked up at the bird (which had just announced 3PM) and said, “I hate that thing.” And I smiled, and the meeting ended soon after, and I got up and left the room and went to my keyboard and wrote some more code.

[I left that start-up a few months later. I still have the clock.]

There are times I do things of dubious value

ChaosAdventurer: The obsolescence of eReaders. Welcome their eventual replacement, the pReader

... and when I do, I share them with you:

Link for those of you who don't want to deal with iframes: https://www.youtube.com/watch?v=DP_1T64XQXA

Learning NVP, Part 5: Creating a Logical Network

I’m back with more NVP goodness; this time, I’ll be walking you through the process of creating a logical network and attaching VMs to that logical network. This work builds on the stuff that has come before it in this series:

- In part 1, I introduced you to the high-level architecture of NVP.

- In part 2, I walked you through setting up a cluster of NVP controllers.

- In part 3, I showed you how to install and configure NVP Manager.

- In part 4, I discussed how to add hypervisors (KVM hosts, in this case) to your NVP environment.

Just a quick reminder in case you’ve forgotten: although VMware recently introduced VMware NSX at VMworld 2013, the architecture of NSX when used in a multi-hypervisor environment is very similar to what you can see today in NVP. (In pure vSphere environments, the NSX architecture is a bit different.) As a result, time spent with NVP now will pay off later when NSX becomes available. Might as well be a bit proactive, right?

At the end of part 4, I mentioned that I was going to revisit a few concepts before proceeding to the logical network piece, but after deeper consideration I’ve decided to proceed with creating a logical network. I still believe there will be a time when I need to stop and revisit some concepts, but it didn’t feel right just yet. Soon, I think.

Before I get into the details on how to create a logical network and attach VMs, I want to first talk about my assumptions regarding your environment.

Assumptions

This walk-through assumes that you have an NVP controller cluster up and running, an instance of NVP Manager connected to that cluster, at least 2 hypervisors installed and added to NVP, and at least 1 VM running on each hypervisor. I further assume that your environment is using KVM and libvirt.

Pursuant to these assumptions, my environment is running KVM on Ubuntu 12.04.2, with libvirt 1.0.2 installed from the Ubuntu Cloud Archive. I have the NVP controller cluster up and running, and an instance of NVP Manager connected to that cluster. I also have an NVP Gateway and an NVP Service Node, two additional components that I haven’t yet discussed. I’ll cover them in a near-future post.

Additionally, to make it easier for myself, I’ve created a libvirt network for the Open vSwitch (OVS) integration bridge, as outlined here (and an update here). This allows me to simply point virsh at the libvirt network, and the guest domain will attach itself to the integration bridge.

Revisiting Transport Zones

I showed you how to create a transport zone in part 4; it was necessary to have a transport zone present in order to add a hypervisor to NVP. But what is a transport zone? I didn’t explain it there, so let me do that now.

NVP uses the idea of transport zones to provide connectivity models based on the topology of the underlying network. For example, you might have hypervisors that connect to one network for management traffic, but use an entirely different network for VM traffic. The combination of a transport zone plus the transport connectors tells NVP how to form tunnels between hypervisors for the purposes of providing logical connectivity.

For example, consider this graphic:

The transport zones (TZ–01 and TZ–02) help NVP understand which interfaces on the hypervisors can communicate with which other interfaces on other hypervisors for the purposes of establishing overlay tunnels. These separate transport zones could be different trust zones, or just reflect the realities of connectivity via the underlying physical network.
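One way to picture this is as a lookup from transport zone to the hypervisor interfaces bound to it. The sketch below is plain Python, not NVP's API; the hypervisor names and IP addresses are invented for illustration and are not taken from the graphic.

```python
from itertools import combinations

# Hypothetical transport connectors: (hypervisor, interface IP, transport zone).
connectors = [
    ("hv-01", "10.1.1.11", "TZ-01"),
    ("hv-02", "10.1.1.12", "TZ-01"),
    ("hv-02", "10.2.2.12", "TZ-02"),
    ("hv-03", "10.2.2.13", "TZ-02"),
]

def tunnel_candidates(connectors):
    """Return pairs of interfaces on different hypervisors that share a zone."""
    pairs = []
    for a, b in combinations(connectors, 2):
        same_zone = a[2] == b[2]
        different_host = a[0] != b[0]
        if same_zone and different_host:
            pairs.append((a[2], a[1], b[1]))
    return pairs

for zone, src, dst in tunnel_candidates(connectors):
    print(f"{zone}: {src} <-> {dst}")
```

In this made-up layout hv-01 and hv-03 share no transport zone, so no tunnel would be formed directly between them.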

Now that I’ve explained transport zones in a bit more detail, hopefully their role in adding hypervisors makes a bit more sense. You’ll also need a transport zone already created in order to create a logical switch, which is what I’ll show you next.

Creating the Logical Switch

Before I get started taking you through this process, I’d like to point out that this process is going to seem laborious. When you’re operating outside of a CMP such as CloudStack or OpenStack, using NVP will require you to do things manually that you might not have expected. So, keep in mind that NVP was designed to be integrated into a CMP, and what you’re seeing here is what it looks like without a CMP. Cool?

The first step is creating the logical switch. To do that, you’ll log into NVP Manager, which will dump you (by default) into the Dashboard. From there, in the Summary of Logical Components section, you’ll click the Add button to add a switch. To create a logical switch, there are four sections in the NVP Manager UI where you’ll need to supply various pieces of information:

- First, you’ll need to provide a display name for the new logical switch. Optionally, you can also specify any tags you’d like to assign to the new logical switch.

- Next, you’ll need to decide whether to use port isolation (sort of like PVLANs; I’ll come back to these later) and how you want to handle packet replication (for BUM traffic). For now, leave port isolation unchecked and (since I haven’t shown you how to set up a service node) leave packet replication set to Source Nodes.

- Third, you’ll need to select the transport zone to which this logical switch should be bound. As I described earlier, transport zones (along with connectors) help define connectivity between various NVP components.

- Finally, you’ll select the logical router, if any, to which this switch should be connected. We won’t be using a logical router here, so just leave that blank.
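To keep the four groups of settings straight, it can help to write them down as a simple record. This is only a note-taking sketch in Python: the class and field names are my own invention, not NVP's API or data model, and the defaults mirror the choices made in this walk-through.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class LogicalSwitchSpec:
    """The four groups of settings described above (field names are made up)."""
    display_name: str
    transport_zone: str
    tags: List[str] = field(default_factory=list)
    port_isolation: bool = False          # left unchecked in this walk-through
    replication_mode: str = "source"      # "Source Nodes"; no service node yet
    logical_router: Optional[str] = None  # left blank in this walk-through

# Hypothetical names for the switch and transport zone.
spec = LogicalSwitchSpec(display_name="demo-switch", transport_zone="TZ-01")
print(spec)
```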

Once the logical switch is created, the next step is to add logical switch ports.

Adding Logical Switch Ports

Naturally, in order to connect to a logical switch, you need logical switch ports. You’ll add a logical switch port for each VM that needs to be connected to the logical switch.

To add a logical switch port, you’ll just click the Add button on the line for Switch Ports in the Summary of Logical Components section of the NVP Manager Dashboard. To create a logical switch port, you’ll need to provide the following information:

- You’ll need to select the logical switch to which this port will be added. The drop-down list will show all the logical switches; once one is selected that switch’s UUID will automatically populate.

- The switch port needs a display name, and (optionally) one or more tags.

- In the Properties section, you can select a port number (leave blank for the next port), whether the port is administratively enabled, and whether or not there is a logical queue you’d like to assign (queues are used for QoS; leave it blank for no queue/no QoS).

- If you want to mirror traffic from one port to another, the Mirror Ports section is where you’ll configure that. Otherwise, just leave it all blank.

- The Attachment section is where you “plug” something into this logical switch port. I’ll come back to this—for now, just leave it blank.

- Under Port Security you can specify what address pairs are allowed to communicate with this port.

- Finally, under Security Profiles, you can attach an existing security profile to this logical port. Security profiles allow you to create ingress/egress access-control lists (ACLs) that are applied to logical switch ports.

In many cases, all you’ll need is the logical switch name, the display name for this logical switch port, and the attachment information. Speaking of attachment information, let’s take a closer look at attachments.

Editing Logical Switch Port Attachment

As I mentioned earlier, the attachment configuration is what “plugs” something into the logical switch. NVP logical switch ports support 6 different types of attachment:

- None is exactly that—nothing. No attachment means an empty logical port.

- VIF is used for connecting VMs to the logical switch.

- Extended Network Bridge is a deprecated option for an older method of bridging logical and physical space. This has been replaced by L2 Gateway (below) and should not be used. (It will likely be removed in future NVP releases.)

- Multi-Domain Interconnect (MDI) is used in specific configurations where you are federating multiple NVP domains.

- L2 Gateway is used for connecting an L2 gateway service to the logical switch (this allows you to bring physical network space into logical network space). This is one I’ll discuss later when I talk about L2 gateways.

- Patch is used to connect a logical switch to a logical router. I’ll discuss this in greater detail when I get around to talking about logical routing.
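If you end up scripting around these choices, the six attachment types map naturally onto an enumeration. The Python sketch below uses the labels from the list above; the `AttachmentType` class and `usable_types` helper are hypothetical and are not part of any NVP library.

```python
from enum import Enum

class AttachmentType(Enum):
    """The six attachment types listed above (labels as shown in the text)."""
    NONE = "None"
    VIF = "VIF"
    EXTENDED_NETWORK_BRIDGE = "Extended Network Bridge"
    MDI = "Multi-Domain Interconnect"
    L2_GATEWAY = "L2 Gateway"
    PATCH = "Patch"

# Deprecated per the text; replaced by L2 Gateway.
DEPRECATED = {AttachmentType.EXTENDED_NETWORK_BRIDGE}

def usable_types():
    """Attachment types that are not deprecated."""
    return [t for t in AttachmentType if t not in DEPRECATED]

print([t.value for t in usable_types()])
```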

For now, I’m just going to focus on attaching VMs to the logical switch port, so you’ll only need to worry about the VIF attachment type. However, before we can attach a VM to the logical switch, you’ll first need a VM powered on and attached to the integration bridge. (Hint: If you’re using KVM, use virsh start <VM name> to start the VM. Or just read this.)

Once you have a VM powered on, you’ll need to be sure you know the specific OVS port on that hypervisor to which the VM is attached. To do that, you would use ovs-vsctl show to get a list of the VM ports (typically designated as “vnetX”), and then use ovs-vsctl list port vnetX to get specific details about that port. Here’s the output you might get from that command:

In particular, note the external_ids row, where it stores the MAC address of the attached VM. You can use this to ensure you know which VM is mapped to which OVS port.
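If you have many ports to check, pulling the MAC address out of that row by script may be easier than reading it by eye. Below is a small Python sketch that parses a map written in the `{key="value", key=value}` style. The sample string is made up for illustration (it is not real command output), and the `attached-mac` key name is an assumption about how the MAC is labeled on your system.

```python
import re

def parse_ovs_map(text):
    """Parse a map such as {key="value", other=value} into a dict."""
    inner = text.strip().strip("{}")
    # Each entry is key=value, where the value is quoted or a bare token.
    pairs = re.findall(r'([\w-]+)=("[^"]*"|[^,\s]+)', inner)
    return {key: value.strip('"') for key, value in pairs}

# Made-up sample of an external_ids value; the MAC and IDs are placeholders.
sample = '{attached-mac="52:54:00:12:34:56", iface-id="example-iface", iface-status=active}'

ids = parse_ovs_map(sample)
print(ids["attached-mac"])  # 52:54:00:12:34:56
```

You could then compare that MAC against the one libvirt reports for each guest to confirm the VM-to-port mapping.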

Once you have the mapping information, you can go back to NVP Manager, select Network Components > Logical Switch Ports from the menu, and then highlight the empty logical switch port you’d like to edit. There is a gear icon at the far right of the row; click that and select Edit. Then click “4. Attachment” to edit the attachment type for that particular logical switch port. From there, it’s pretty straightforward:

- Select “VIF” from the Attachment Type drop-down.

- Select your specific hypervisor (must already be attached to NVP per part 4) from the Hypervisor drop-down.

- Select the OVS port (which you verified just a moment ago) using the VIF drop-down.

Click Save, and that’s it—your VM is now attached to an NVP logical network! A single VM attached to a logical network all by itself is no fun, so repeat this process (start up VM if not already running, verify OVS port, create logical switch port [if needed], edit attachment) to attach a few other VMs to the same logical network. Just for fun, be sure that at least one of the other VMs is on a different hypervisor—this will ensure that you have an overlay tunnel created between the hypervisors. That’s something I’ll be discussing in a near-future post (possibly part 6, maybe part 7).

Once your VMs are attached to the logical network, assign IP addresses to them (there’s no DHCP in your logical network, unless you installed a DHCP server on one of your VMs) and test connectivity. If everything went as expected, you should be able to ping VMs, SSH from one to another, etc., all within the confines of the new NVP logical network you just created.
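Since the addresses have to be assigned by hand, a tiny helper for planning them can avoid duplicates. This Python sketch only produces an address plan; it does not configure anything. The VM names and the 192.168.100.0/24 subnet are placeholders, so substitute whatever fits your lab.

```python
import ipaddress

def assign_addresses(vm_names, cidr):
    """Pair each VM name with the next usable host address in `cidr`."""
    network = ipaddress.ip_network(cidr)
    hosts = iter(network.hosts())  # usable addresses, in ascending order
    return {name: f"{next(hosts)}/{network.prefixlen}" for name in vm_names}

# Hypothetical VM names and subnet.
plan = assign_addresses(["vm-01", "vm-02", "vm-03"], "192.168.100.0/24")
for name, address in plan.items():
    print(name, address)
```

The sketch does not handle the case where there are more VMs than usable addresses in the subnet.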

There’s so much more to show you yet, but I’ll wrap this up here—this post is already way too long. Feel free to post any questions, corrections, or clarifications in the comments below. Courteous comments (with vendor disclosure, where applicable) are always welcome!

This article was originally posted on blog.scottlowe.org. Visit the site for more information on virtualization, servers, storage, and other enterprise technologies.
